Date: Mon, 13 Mar 2006 22:55:25 +0100

Hi Nick,

I looked at your example and was not able to reproduce the problem systematically with the CVS HEAD version of ROOT. (I got it only a few times, probably depending on the random seed; changing the number of epochs also changed the behavior.)

Looking at your test macro, I also noticed the following:

- You have twice as many type==1 events as type==0 events. Using a weight in that case can help produce more symmetric distributions.
- You might also benefit from the developments by Andrea, by putting a "!" in the last layer.

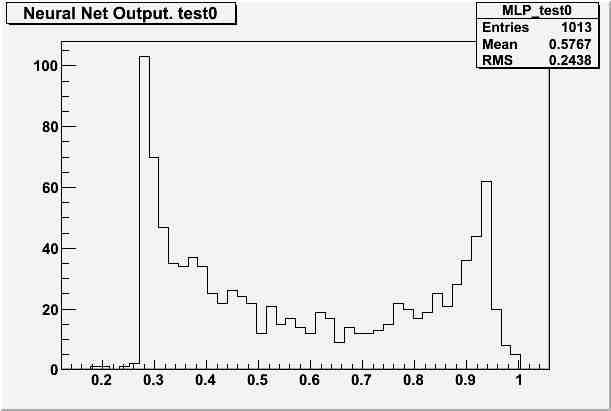

I attached a modified version of your test, together with the resulting distribution.

Cheers,

Christophe.

On Wednesday 8 March 2006 19:20, Rene Brun wrote:

> Hi Nick, Christophe, Andrea,

>

> TMultiLayerPerceptron was "upgraded" by Christophe and Andrea

> in version 5.10 in January.

> See the comments in the development notes at

> http://root.cern.ch/root/html/examples/V5.10.txt.html

> see CVS change log of:

> 2006-01-09 16:47 brun

> 2006-01-13 10:10 brun

>

> I expect that Christophe or/and Andrea will comment.

>

> Rene Brun

>

>

> On Wed, 8 Mar 2006, Nick West wrote:

> > Dear RootTalk,

> >

> > We are using TMultiLayerPerceptron but recently its behaviour has

> > changed in ways that we don't understand: fits that previously worked

> > now give degenerate results.

> >

> > The network we are using has

> >

> > o 3 input neurons: trkPHperPlane, eventPlanes, shwPHperStrip

> >

> > o One hidden layer with 5 neurons

> >

> > o 1 output neuron: type

> >

> > We have a TTree called inTree containing the branches: trkPHperPlane,

> > eventPlanes, shwPHperStrip and type. We take 4 out of every 5 events

> > in the TTree for training purposes, so our constructor is:-

> >

> > TMultiLayerPerceptron("@trkPHperPlane, @eventPlanes, @shwPHperStrip:5:type",

> > inTree,

> > "Entry$%5",

> > "!(Entry$%5)");

> >

> > We have been using the default training method (kBFGS - Broyden,

> > Fletcher, Goldfarb, Shanno). We noticed the problem when migrating from

> > ROOT 4.04/02 to 5.08/00. Sometimes the resulting net became quasi

> > degenerate - giving basically the same output for any input. In

> > exploring the problem we discovered that changing the method:-

> >

> > SetLearningMethod(TMultiLayerPerceptron::kStochastic)

> >

> > enhanced the effect. With 4.04/02 the fit gives a plausible network

> > every time, but with 5.08/00 it is almost always degenerate.

> >

> > To demonstrate the problem we have a tar file containing:-

> >

> > exampleTree.root

> > testMlp.C

> >

> > It can be found at:-

> >

> >

> > http://www-pnp.physics.ox.ac.uk/~west/minos/TMultiLayerPerceptron_problem.tar.gz

> >

> > Unwind the tar file and:-

> >

> > root -b -q testMlp.C

> >

> > testMlp.C consists of:-

> >

> >

> > {

> >

> > gSystem->Load("libMLP");

> >

> > TFile input("exampleTree.root");

> > TTree *inTree = input.Get("smallTree");

> >

> > TMultiLayerPerceptron *mlp = new

> > TMultiLayerPerceptron("@trkPHperPlane, @eventPlanes, @shwPHperStrip:5:type", inTree, "Entry$%5","!(Entry$%5)");

> > mlp->SetLearningMethod(TMultiLayerPerceptron::kStochastic);

> > mlp->Train(50,"text,update=10");

> > mlp->DrawResult(0,"test");

> > cout << "Test set distribution. Mean : " << MLP_test0.GetMean() << " RMS " << MLP_test0.GetRMS() << endl;

> >

> > }

> >

> > After defining and training the net, the results of applying to the

> > test set is displayed and then, using the wonders of CINT, the mean

> > and RMS of that histogram are displayed.

> >

> > Here are some typical results from 4.04/02

> >

> > Test set distribution. Mean : 0.73054 RMS 0.212484

> > Test set distribution. Mean : 0.93671 RMS 0.241446

> > Test set distribution. Mean : 0.590702 RMS 0.231579

> > Test set distribution. Mean : 0.727839 RMS 0.167396

> >

> > Contrast this with the results from 5.08/00

> >

> > Test set distribution. Mean : 0.49273 RMS 0.0481377

> > Test set distribution. Mean : 0.515413 RMS 0.0805224

> > Test set distribution. Mean : 0.9872 RMS 0.0317544

> > Test set distribution. Mean : 0.891544 RMS 0.0351175

> >

> > Notice the much smaller RMS showing that the distribution has

> > collapsed to a narrow peak. The same behaviour persists to the

> > latest ROOT as of last night.

> >

> > TMultiLayerPerceptron has changed significantly since 4.04/02, have

> > we missed a significant change in usage?

> >

> > Our platform is Scientific Linux SL Release 3.0.4 (SL)

> > with gcc version 3.2.3

> >

> > Thanks,

> >

> > Nick West.

--
+-----------------------------------------------------------\|/---+
| Christophe DELAERE      office: e253                     !o o!  |
| UCL - FYNU              phone : 32-(0)10-473234          ! i !  |
| chemin du cyclotron, 2  fax   : 32-(0)10-452183           `-'   |
| 1348 Louvain-la-Neuve   BELGIUM e-mail: delaere_at_fynu.ucl.ac.be |
+-----------------------------------------------------------------+

Received on Mon Mar 13 2006 - 22:55:50 MET

- text/x-c++src attachment: testMlp.C