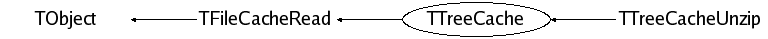

class TTreeCache: public TFileCacheRead

TTreeCache

A specialized TFileCacheRead object for a TTree.

This class acts as a file cache, automatically registering the baskets from the branches being processed (TTree::Draw or TTree::Process and TSelectors) when in the learning phase. The learning phase is by default 100 entries. It can be changed via TTreeCache::SetLearnEntries.

This cache considerably speeds up performance, in particular when the Tree is accessed remotely via a high-latency network.

The default cache size (10 MBytes) may be changed via the function TTree::SetCacheSize.

Only the baskets for the requested entry range are put in the cache.

For each Tree being processed a TTreeCache object is created. This object is automatically deleted when the Tree is deleted or when the file is deleted.

- Special case of a TChain: once the training is done on the first Tree, the list of branches in the cache is kept for the following files.
- Special case of a TEventList: if the Tree or TChain has a TEventList, only the buffers referenced by the list are put in the cache.

The learning period is started or restarted when:

- TTree::SetCacheSize is called for the first time.
- TTree::SetCacheSize is called a second time with a different size.
- TTreeCache::StartLearningPhase is called.
- TTree[Cache]::SetEntryRange is called
  * and the learning is not yet finished
  * and has not been set to manual
  * and the new minimum entry is different.

The learning period is stopped (and prefetching is actually started) when:

- TTree[Cache]::StopLearningPhase is called.
- An entry outside the 'learning' range is requested. The 'learning' range is from fEntryMin (default 0) to fEntryMin + fgLearnEntries (default 100).
- A 'cached' TChain switches over to a new file.

WHY DO WE NEED the TreeCache when doing data analysis?

When writing a TTree, the branch buffers are kept in memory. A typical branch buffer size (before compression) is 32 KBytes. After compression, the zipped buffer may be just a few KBytes.
The branch buffers cannot be much larger in the case of Trees with several hundred or thousand branches.

When writing, this does not generate a performance problem because branch buffers are always written sequentially and the OS is in general clever enough to flush the data to the output file when a few MBytes of data have to be written.

When reading, on the contrary, one may hit a performance problem when reading across a network (LAN or WAN) and the network latency is high. For example, in a WAN with 10 ms latency, reading 1000 buffers of 10 KBytes each with no cache will imply a 10 s penalty, where a local read of the 10 MBytes would take about 1 second.

The TreeCache will try to prefetch all the buffers for the selected branches such that instead of transferring 1000 buffers of 10 KBytes, it will be able to transfer one single large buffer of 10 MBytes in one single transaction. Not only does the TreeCache minimize the number of transfers, but in addition it can sort the blocks to be read in increasing order such that the file is read sequentially.

Systems like xrootd, dCache or httpd take advantage of the TreeCache by reading ahead as much data as they can, returning to the application the maximum data specified in the cache, and having the next chunk of data ready when the next request comes.

HOW TO USE the TreeCache

A few use cases are discussed below. It is not simple to activate the cache by default (except case 1 below) because there are many possible configurations. In some applications you know a priori the list of branches to read. In other applications the analysis loop calls several layers of user functions where it is impossible to predict a priori which branches will be used. This is probably the most frequent case. In this case ROOT I/O will flag used branches automatically when a branch buffer is read during the learning phase. The TreeCache interface provides functions to instruct the cache about the used branches if they are known a priori.
In the examples below, portions of analysis code are shown. The few statements involving the TreeCache are marked with //<<<

1- with TTree::Draw

The TreeCache is automatically used by TTree::Draw. The function knows which branches are used in the query and automatically puts these branches in the cache. The entry range is also known automatically.

2- with TTree::Process and TSelectors

You must enable the cache, tell the system which branches to cache, and also specify the entry range. It is important to specify the entry range in case you process only a subset of the events; otherwise you run the risk of storing in the cache entries that you do not need.

-- example 2a --

    TTree *T = (TTree*)f->Get("mytree");
    Long64_t nentries = T->GetEntries();
    Int_t cachesize = 10000000;        //10 MBytes
    T->SetCacheSize(cachesize);        //<<<
    T->AddBranchToCache("*",kTRUE);    //<<< add all branches to the cache
    T->Process("myselector.C+");

    //in the TSelector::Process function we read all branches
    T->GetEntry(i);
    ... here you process your entry

-- example 2b --

In the Process function we read a subset of the branches. Only the branches used in the first entry will be put in the cache.

    TTree *T = (TTree*)f->Get("mytree");
    //we want to process only the 200 first entries
    Long64_t nentries = 200;
    Long64_t efirst = 0;
    Long64_t elast  = efirst+nentries;
    Int_t cachesize = 10000000;            //10 MBytes
    TTreeCache::SetLearnEntries(1);        //<<< we can take the decision after 1 entry
    T->SetCacheSize(cachesize);            //<<<
    T->SetCacheEntryRange(efirst,elast);   //<<<
    T->Process("myselector.C+","",nentries,efirst);

    // in the TSelector::Process we read only 2 branches
    TBranch *b1 = T->GetBranch("branch1");
    b1->GetEntry(i);
    if (somecondition) return;
    TBranch *b2 = T->GetBranch("branch2");
    b2->GetEntry(i);
    ... here you process your entry

3- with your own event loop

-- example 3a --

In your analysis loop, you always use 2 branches. You want to prefetch the branch buffers for these 2 branches only.

    TTree *T = (TTree*)f->Get("mytree");
    TBranch *b1 = T->GetBranch("branch1");
    TBranch *b2 = T->GetBranch("branch2");
    Long64_t nentries = T->GetEntries();
    Int_t cachesize = 10000000;        //10 MBytes
    T->SetCacheSize(cachesize);        //<<<
    T->AddBranchToCache(b1,kTRUE);     //<<< add branch1 and branch2 to the cache
    T->AddBranchToCache(b2,kTRUE);     //<<<
    T->StopCacheLearningPhase();       //<<<
    for (Long64_t i=0;i<nentries;i++) {
       T->LoadTree(i);                 //<<< important call when calling TBranch::GetEntry after
       b1->GetEntry(i);
       if (some condition not met) continue;
       b2->GetEntry(i);
       if (some condition not met) continue;
       //here we read the full event only in some rare cases.
       //there is no point in caching the other branches as it might be
       //more economical to read only the branch buffers really used.
       T->GetEntry(i);
       .. process the rare but interesting cases.
       ... here you process your entry
    }

-- example 3b --

In your analysis loop, you always use 2 branches in the main loop. You also call some analysis functions where a few more branches will be read, but you do not know a priori which ones. There is no point in prefetching branches that will be used very rarely.

    TTree *T = (TTree*)f->Get("mytree");
    Long64_t nentries = T->GetEntries();
    Int_t cachesize = 10000000;        //10 MBytes
    T->SetCacheSize(cachesize);        //<<<
    T->SetCacheLearnEntries(5);        //<<< we can take the decision after 5 entries
    TBranch *b1 = T->GetBranch("branch1");
    TBranch *b2 = T->GetBranch("branch2");
    for (Long64_t i=0;i<nentries;i++) {
       T->LoadTree(i);
       b1->GetEntry(i);
       if (some condition not met) continue;
       b2->GetEntry(i);
       //at this point we may call a user function where a few more branches
       //will be read conditionally. These branches will be put in the cache
       //if they have been used in the first 5 entries
       if (some condition not met) continue;
       //here we read the full event only in some rare cases.
       //there is no point in caching the other branches as it might be
       //more economical to read only the branch buffers really used.
       T->GetEntry(i);
       .. process the rare but interesting cases.
       ... here you process your entry
    }

SPECIAL CASES WHERE TreeCache should not be activated

When reading only a small fraction of all entries such that not all branch buffers are read, it might be faster to run without a cache.

HOW TO VERIFY That the TreeCache has been used and check its performance

Once your analysis loop has terminated, you can access/print the number of effective system reads for a given file with code like the following (where TFile* f is a pointer to your file):

    printf("Reading %lld bytes in %d transactions\n",f->GetBytesRead(), f->GetReadCalls());
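The latency figures quoted in the WHY section above (10 ms per round trip, 1000 buffers) can be reproduced with a few lines of arithmetic. The sketch below is plain C++ and does not use ROOT; `LatencyPenaltySeconds` is a hypothetical helper introduced only for illustration, and it models the round-trip cost alone, ignoring bandwidth.

```cpp
// Hypothetical helper (not part of ROOT): time in seconds spent waiting on
// network round trips for a given number of read transactions.
// Assumption: each transaction costs exactly one round trip of latencyMs.
double LatencyPenaltySeconds(long nTransactions, double latencyMs)
{
   return nTransactions * latencyMs / 1000.0;
}

// Without a cache: one round trip per branch buffer (1000 buffers at 10 ms).
double UncachedPenalty() { return LatencyPenaltySeconds(1000, 10.0); }

// With the cache: the same data fetched in a single large transaction.
double CachedPenalty() { return LatencyPenaltySeconds(1, 10.0); }
```

Under these assumptions `UncachedPenalty()` gives the 10 s penalty mentioned in the text, while `CachedPenalty()` is a single 10 ms round trip.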

Function Members (Methods)

public:

| TTreeCache() | |

| TTreeCache(TTree* tree, Int_t buffersize = 0) | |

| virtual | ~TTreeCache() |

| void | TObject::AbstractMethod(const char* method) const |

| virtual void | AddBranch(TBranch* b, Bool_t subbranches = kFALSE) |

| virtual void | AddBranch(const char* branch, Bool_t subbranches = kFALSE) |

| virtual void | TObject::AppendPad(Option_t* option = "") |

| virtual void | TObject::Browse(TBrowser* b) |

| static TClass* | Class() |

| virtual const char* | TObject::ClassName() const |

| virtual void | TObject::Clear(Option_t* = "") |

| virtual TObject* | TObject::Clone(const char* newname = "") const |

| virtual Int_t | TObject::Compare(const TObject* obj) const |

| virtual void | TObject::Copy(TObject& object) const |

| virtual void | TObject::Delete(Option_t* option = "") *MENU* |

| virtual Int_t | TObject::DistancetoPrimitive(Int_t px, Int_t py) |

| virtual void | TObject::Draw(Option_t* option = "") |

| virtual void | TObject::DrawClass() const *MENU* |

| virtual TObject* | TObject::DrawClone(Option_t* option = "") const *MENU* |

| virtual void | TObject::Dump() const *MENU* |

| virtual void | TObject::Error(const char* method, const char* msgfmt) const |

| virtual void | TObject::Execute(const char* method, const char* params, Int_t* error = 0) |

| virtual void | TObject::Execute(TMethod* method, TObjArray* params, Int_t* error = 0) |

| virtual void | TObject::ExecuteEvent(Int_t event, Int_t px, Int_t py) |

| virtual void | TObject::Fatal(const char* method, const char* msgfmt) const |

| virtual Bool_t | FillBuffer() |

| virtual TObject* | TObject::FindObject(const char* name) const |

| virtual TObject* | TObject::FindObject(const TObject* obj) const |

| virtual Int_t | TFileCacheRead::GetBufferSize() const |

| virtual Option_t* | TObject::GetDrawOption() const |

| static Long_t | TObject::GetDtorOnly() |

| Double_t | GetEfficiency() |

| Double_t | GetEfficiencyRel() |

| virtual const char* | TObject::GetIconName() const |

| static Int_t | GetLearnEntries() |

| virtual const char* | TObject::GetName() const |

| virtual char* | TObject::GetObjectInfo(Int_t px, Int_t py) const |

| static Bool_t | TObject::GetObjectStat() |

| virtual Option_t* | TObject::GetOption() const |

| TTree* | GetOwner() const |

| virtual const char* | TObject::GetTitle() const |

| TTree* | GetTree() const |

| virtual UInt_t | TObject::GetUniqueID() const |

| virtual Int_t | TFileCacheRead::GetUnzipBuffer(char**, Long64_t, Int_t, Bool_t*) |

| virtual Bool_t | TObject::HandleTimer(TTimer* timer) |

| virtual ULong_t | TObject::Hash() const |

| virtual void | TObject::Info(const char* method, const char* msgfmt) const |

| virtual Bool_t | TObject::InheritsFrom(const char* classname) const |

| virtual Bool_t | TObject::InheritsFrom(const TClass* cl) const |

| virtual void | TObject::Inspect() const *MENU* |

| void | TObject::InvertBit(UInt_t f) |

| virtual TClass* | IsA() const |

| virtual Bool_t | TFileCacheRead::IsAsyncReading() const |

| virtual Bool_t | TObject::IsEqual(const TObject* obj) const |

| virtual Bool_t | TObject::IsFolder() const |

| virtual Bool_t | IsLearning() const |

| Bool_t | TObject::IsOnHeap() const |

| virtual Bool_t | TObject::IsSortable() const |

| Bool_t | TObject::IsZombie() const |

| virtual void | TObject::ls(Option_t* option = "") const |

| void | TObject::MayNotUse(const char* method) const |

| virtual Bool_t | TObject::Notify() |

| static void | TObject::operator delete(void* ptr) |

| static void | TObject::operator delete(void* ptr, void* vp) |

| static void | TObject::operator delete[](void* ptr) |

| static void | TObject::operator delete[](void* ptr, void* vp) |

| void* | TObject::operator new(size_t sz) |

| void* | TObject::operator new(size_t sz, void* vp) |

| void* | TObject::operator new[](size_t sz) |

| void* | TObject::operator new[](size_t sz, void* vp) |

| virtual void | TObject::Paint(Option_t* option = "") |

| virtual void | TObject::Pop() |

| virtual void | TFileCacheRead::Prefetch(Long64_t pos, Int_t len) |

| virtual void | TFileCacheRead::Print(Option_t* option = "") const |

| virtual Int_t | TObject::Read(const char* name) |

| virtual Int_t | ReadBuffer(char* buf, Long64_t pos, Int_t len) |

| virtual Int_t | TFileCacheRead::ReadBufferExt(char* buf, Long64_t pos, Int_t len, Int_t& loc) |

| virtual void | TObject::RecursiveRemove(TObject* obj) |

| void | TObject::ResetBit(UInt_t f) |

| virtual void | ResetCache() |

| virtual void | TObject::SaveAs(const char* filename = "", Option_t* option = "") const *MENU* |

| virtual void | TObject::SavePrimitive(basic_ostream<char,char_traits<char> >& out, Option_t* option = "") |

| void | TObject::SetBit(UInt_t f) |

| void | TObject::SetBit(UInt_t f, Bool_t set) |

| virtual void | TObject::SetDrawOption(Option_t* option = "") *MENU* |

| static void | TObject::SetDtorOnly(void* obj) |

| virtual void | SetEntryRange(Long64_t emin, Long64_t emax) |

| virtual void | TFileCacheRead::SetFile(TFile* file) |

| static void | SetLearnEntries(Int_t n = 10) |

| static void | TObject::SetObjectStat(Bool_t stat) |

| virtual void | TFileCacheRead::SetSkipZip(Bool_t = kTRUE) |

| virtual void | TObject::SetUniqueID(UInt_t uid) |

| virtual void | ShowMembers(TMemberInspector& insp, char* parent) |

| virtual void | TFileCacheRead::Sort() |

| void | StartLearningPhase() |

| virtual void | StopLearningPhase() |

| virtual void | Streamer(TBuffer& b) |

| void | StreamerNVirtual(TBuffer& b) |

| virtual void | TObject::SysError(const char* method, const char* msgfmt) const |

| Bool_t | TObject::TestBit(UInt_t f) const |

| Int_t | TObject::TestBits(UInt_t f) const |

| virtual void | UpdateBranches(TTree* tree, Bool_t owner = kFALSE) |

| virtual void | TObject::UseCurrentStyle() |

| virtual void | TObject::Warning(const char* method, const char* msgfmt) const |

| virtual Int_t | TObject::Write(const char* name = 0, Int_t option = 0, Int_t bufsize = 0) |

| virtual Int_t | TObject::Write(const char* name = 0, Int_t option = 0, Int_t bufsize = 0) const |

protected:

| virtual void | TObject::DoError(int level, const char* location, const char* fmt, va_list va) const |

| void | TObject::MakeZombie() |

private:

| TTreeCache(const TTreeCache&) | |

| TTreeCache& | operator=(const TTreeCache&) |

Data Members

public:

| enum TObject::EStatusBits | kCanDelete, kMustCleanup, kObjInCanvas, kIsReferenced, kHasUUID, kCannotPick, kNoContextMenu, kInvalidObject |

| enum TObject::[unnamed] | kIsOnHeap, kNotDeleted, kZombie, kBitMask, kSingleKey, kOverwrite, kWriteDelete |

protected:

| Bool_t | TFileCacheRead::fAsyncReading | |

| TList* | fBrNames | ! list of branch names in the cache |

| TObjArray* | fBranches | ! List of branches to be stored in the cache |

| char* | TFileCacheRead::fBuffer | [fBufferSize] buffer of contiguous prefetched blocks |

| Int_t | TFileCacheRead::fBufferLen | Current buffer length (<= fBufferSize) |

| Int_t | TFileCacheRead::fBufferSize | Allocated size of fBuffer (at a given time) |

| Int_t | TFileCacheRead::fBufferSizeMin | Original size of fBuffer |

| Long64_t | fEntryCurrent | ! current lowest entry number in the cache |

| Long64_t | fEntryMax | ! last entry in the cache |

| Long64_t | fEntryMin | ! first entry in the cache |

| Long64_t | fEntryNext | ! next entry number where cache must be filled |

| TFile* | TFileCacheRead::fFile | Pointer to file |

| Bool_t | fIsLearning | ! true if cache is in learning mode |

| Bool_t | fIsManual | ! true if StopLearningPhase was used |

| Bool_t | TFileCacheRead::fIsSorted | True if fSeek array is sorted |

| Bool_t | TFileCacheRead::fIsTransferred | True when fBuffer contains something valid |

| Int_t* | TFileCacheRead::fLen | [fNb] Length of long buffers |

| Int_t | fNReadMiss | Number of blocks read and not found in the cache |

| Int_t | fNReadOk | Number of blocks read and found in the cache |

| Int_t | fNReadPref | Number of blocks that were prefetched |

| Int_t | TFileCacheRead::fNb | Number of long buffers |

| Int_t | fNbranches | ! Number of branches in the cache |

| Int_t | TFileCacheRead::fNseek | Number of blocks to be prefetched |

| Int_t | TFileCacheRead::fNtot | Total size of prefetched blocks |

| TTree* | fOwner | ! pointer to the owner Tree/chain |

| Long64_t* | TFileCacheRead::fPos | [fNb] start of long buffers |

| Long64_t* | TFileCacheRead::fSeek | [fNseek] Position on file of buffers to be prefetched |

| Int_t* | TFileCacheRead::fSeekIndex | [fNseek] sorted index table of fSeek |

| Int_t* | TFileCacheRead::fSeekLen | [fNseek] Length of buffers to be prefetched |

| Int_t* | TFileCacheRead::fSeekPos | [fNseek] Position of sorted blocks in fBuffer |

| Int_t | TFileCacheRead::fSeekSize | Allocated size of fSeek |

| Long64_t* | TFileCacheRead::fSeekSort | [fNseek] Position on file of buffers to be prefetched (sorted) |

| Int_t* | TFileCacheRead::fSeekSortLen | [fNseek] Length of buffers to be prefetched (sorted) |

| TTree* | fTree | ! pointer to the current Tree |

| Long64_t | fZipBytes | ! Total compressed size of branches in cache |

| static Int_t | fgLearnEntries | Number of entries used for learning mode |


Function documentation

void AddBranch(const char *bname, Bool_t subbranches /*= kFALSE*/)

Add a branch to the list of branches to be stored in the cache. This function is intended to be called by the user (that's why we pass the name of the branch). It works in exactly the same way as TTree::SetBranchStatus, so you probably want to look there for details about the use of bname with regular expressions. The branches are taken with respect to the Owner of this TTreeCache (i.e. the original Tree). NB: if bname="*", all branches are put in the cache and the learning phase is stopped.

Double_t GetEfficiency()

Give the total efficiency of the cache, defined as the ratio of blocks found in the cache to the number of blocks prefetched (it can be more than 1 if the same block is read from the cache more than once). Note: this should be used at the end of the processing, otherwise the statistics will be incomplete.

Double_t GetEfficiencyRel()

This indicates a sort of relative efficiency: the ratio of the reads found in the cache to the number of reads so far.
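The two ratios described for GetEfficiency and GetEfficiencyRel can be sketched as follows. This is a minimal standalone model, not the ROOT implementation: `CacheCounters` is a hypothetical struct standing in for the fNReadOk, fNReadMiss and fNReadPref data members, the mapping of those counters to each ratio is inferred from their descriptions, and returning 0 for an empty denominator is an assumption.

```cpp
// Hypothetical stand-in for the TTreeCache counters listed under Data Members.
struct CacheCounters {
   int nReadOk;    // blocks read and found in the cache (cf. fNReadOk)
   int nReadMiss;  // blocks read and not found in the cache (cf. fNReadMiss)
   int nReadPref;  // blocks that were prefetched (cf. fNReadPref)
};

// Ratio of cache hits to prefetched blocks.
// Assumption: returns 0 when nothing has been prefetched yet.
double Efficiency(const CacheCounters &c)
{
   if (c.nReadPref == 0) return 0.0;
   return static_cast<double>(c.nReadOk) / c.nReadPref;
}

// Ratio of cache hits to all reads performed so far.
// Assumption: returns 0 when no read has happened yet.
double EfficiencyRel(const CacheCounters &c)
{
   const int nReads = c.nReadOk + c.nReadMiss;
   if (nReads == 0) return 0.0;
   return static_cast<double>(c.nReadOk) / nReads;
}
```

With 150 hits, 50 misses and 100 prefetched blocks, the first ratio exceeds 1 because some blocks were served from the cache more than once, while the relative ratio stays at or below 1.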

Int_t GetLearnEntries()

Static function returning the number of entries used to train the cache. See SetLearnEntries.

Int_t ReadBuffer(char* buf, Long64_t pos, Int_t len)

Read buffer at position pos. If pos is in the list of prefetched blocks, read from fBuffer. Otherwise try to fill the cache from the list of selected branches, and recheck whether pos is now in the list. Returns -1 in case of read failure, 0 if the block is not in the cache, 1 if it was read from the cache. This function overrides TFileCacheRead::ReadBuffer.
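The lookup step of ReadBuffer can be illustrated with a simplified model. The sketch below is not the ROOT code: `BlockCache` and `ReadFromCache` are hypothetical names, the members only loosely mirror fSeekSort, fSeekSortLen, fSeekPos and fBuffer, and the "fill the cache and recheck" step and the -1 failure path are left out.

```cpp
#include <algorithm>
#include <cstring>
#include <vector>

// Hypothetical, simplified model of a prefetch cache (not the ROOT implementation).
struct BlockCache {
   std::vector<long long> seekSort; // file positions of prefetched blocks, sorted ascending
   std::vector<int>       seekLen;  // length of each prefetched block
   std::vector<int>       seekPos;  // offset of each block inside 'buffer'
   std::vector<char>      buffer;   // contiguous prefetched data
};

// Returns 1 and copies the data into buf if a block starting at 'pos' with
// length 'len' is in the cache; returns 0 otherwise.
int ReadFromCache(const BlockCache &c, char *buf, long long pos, int len)
{
   // Binary search is possible because the positions are kept sorted.
   auto it = std::lower_bound(c.seekSort.begin(), c.seekSort.end(), pos);
   if (it == c.seekSort.end() || *it != pos) return 0;

   const std::size_t i = static_cast<std::size_t>(it - c.seekSort.begin());
   if (c.seekLen[i] != len) return 0;

   std::memcpy(buf, c.buffer.data() + c.seekPos[i], static_cast<std::size_t>(len));
   return 1;
}
```

A caller would fall back to a direct file read whenever the function returns 0.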

void SetEntryRange(Long64_t emin, Long64_t emax)

Set the minimum and maximum entry number to be processed. This information helps to optimize the number of baskets to read when prefetching the branch buffers.

void SetLearnEntries(Int_t n = 10)

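Static function to set the number of entries to be used in learning mode. The default value of the argument n is 10, and n must be >= 1. Note that the class description gives 100 entries as the default length of the learning phase, which applies as long as this function has not been called.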
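The class description states that the learning range runs from fEntryMin to fEntryMin + fgLearnEntries and that learning stops once an entry outside that range is requested. A minimal sketch of that check follows; `InLearningRange` is a hypothetical helper, and treating the upper bound as exclusive is an assumption, since the text does not say whether the last entry is included.

```cpp
// Hypothetical helper (not a ROOT function): true while 'entry' lies inside the
// learning range that starts at entryMin and spans learnEntries entries.
// Assumption: the range is half-open, [entryMin, entryMin + learnEntries).
bool InLearningRange(long long entry, long long entryMin, int learnEntries)
{
   return entry >= entryMin && entry < entryMin + learnEntries;
}
```

With the default of 100 learning entries and fEntryMin at 0, entries 0 to 99 fall inside the range under this assumption, and a request for entry 100 would end the learning phase.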

void StartLearningPhase()

The name should be enough to explain the method. The only additional comment is that the cache is cleaned before the new learning phase.

void StopLearningPhase()

This is the counterpart of StartLearningPhase() and can be used to stop the learning phase. It is useful when the user knows exactly which branches will be used. For the moment it is just a call to FillBuffer(), since that method creates the buffer lists from the specified branches.

void UpdateBranches(TTree* tree, Bool_t owner = kFALSE)

Update pointer to current Tree and recompute pointers to the branches in the cache.

TTreeCache(const TTreeCache& )

TTreeCache& operator=(const TTreeCache& )