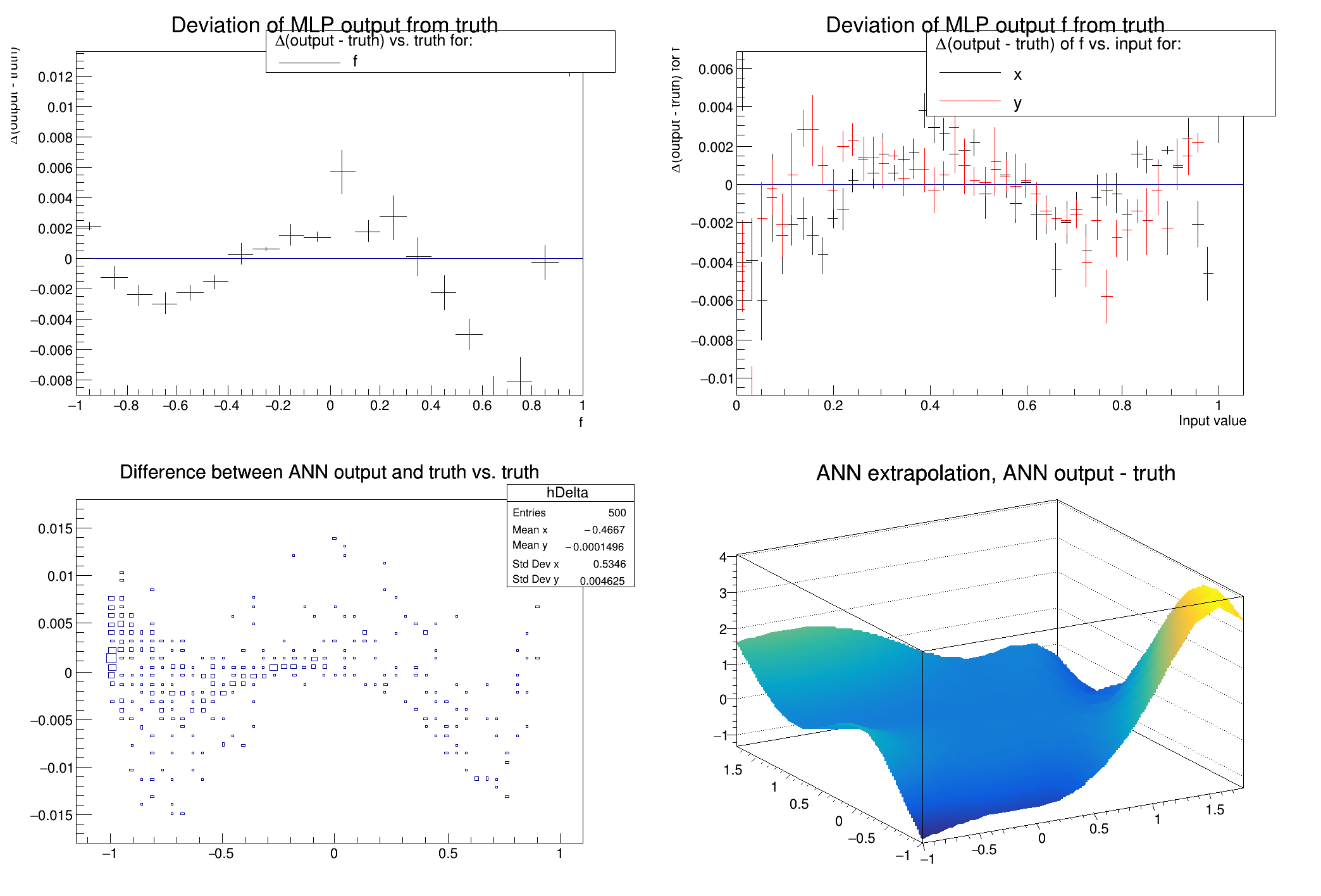

This macro shows the use of an artificial neural network (ANN) for regression analysis: given a set {i} of input vectors i and a set {o} of output vectors o, one looks for the unknown function f such that f(i)=o.

For simplicity, we use a known function to create the test and training data. In reality this function is usually not known, and the data comes, e.g., from measurements.
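A minimal sketch of this data generation outside ROOT, under stated assumptions: `std::mt19937` stands in for ROOT's `TRandom`, the inputs are drawn uniformly in [0,1), and the helper `makeSamples` and its seed are hypothetical. The test function and the 1000-entry count are taken from the macro. The sketch also mimics the macro's training/test split, where the selection `"Entry$%2"` (odd entries) defines the training sample and `"(Entry$%2)==0"` (even entries) the test sample.

```cpp
#include <cassert>
#include <cmath>
#include <random>
#include <vector>

// Same test function as theUnknownFunction() in the macro.
double theUnknownFunction(double x, double y) {
   return std::sin((1.7 + x) * (x - 0.3) - 2.3 * (y + 0.7));
}

struct Sample { double x, y, f; };

// Hypothetical helper: draws n points uniformly in [0,1) x [0,1), labels them
// with theUnknownFunction, and splits them by entry parity like the macro:
// odd entries ("Entry$%2") go to training, even entries ("(Entry$%2)==0") to test.
// Assumption: std::mt19937 replaces ROOT's TRandom; the seed is arbitrary.
void makeSamples(int n, unsigned seed,
                 std::vector<Sample>& train, std::vector<Sample>& test) {
   assert(n >= 0);
   std::mt19937 gen(seed);
   std::uniform_real_distribution<double> uni(0.0, 1.0);
   for (int entry = 0; entry < n; ++entry) {
      const double x = uni(gen);
      const double y = uni(gen);
      const Sample s{x, y, theUnknownFunction(x, y)};
      if (entry % 2 != 0)
         train.push_back(s);   // odd entry -> training sample
      else
         test.push_back(s);    // even entry -> test sample
   }
}
```

With 1000 entries, each sample receives 500 points, so the network is validated on data it never saw during training.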

```
Network with structure: x,y:10:8:f
```
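The layout string `x,y:10:8:f` lists the input variables (x and y), two hidden layers with 10 and 8 neurons, and the output variable f. As a rough illustration, the hypothetical helpers below parse such a string into layer sizes and count the weights and biases of a generic fully connected network of that shape. This assumes one bias per non-input neuron and ignores any extra layout syntax ROOT may accept; it is not necessarily how `TMultiLayerPerceptron` counts its parameters internally.

```cpp
#include <cassert>
#include <cstddef>
#include <sstream>
#include <string>
#include <vector>

// Hypothetical helper: turns a layout such as "x,y:10:8:f" into layer sizes.
// The first and last fields list comma-separated variable names (one neuron
// each); the fields in between are hidden-layer neuron counts.
std::vector<int> layerSizes(const std::string& layout) {
   std::vector<std::string> fields;
   std::stringstream ss(layout);
   std::string field;
   while (std::getline(ss, field, ':')) fields.push_back(field);
   assert(fields.size() >= 2);

   std::vector<int> sizes;
   for (std::size_t i = 0; i < fields.size(); ++i) {
      if (i == 0 || i + 1 == fields.size()) {
         int n = 1;  // number of names = number of commas + 1
         for (char c : fields[i]) if (c == ',') ++n;
         sizes.push_back(n);
      } else {
         sizes.push_back(std::stoi(fields[i]));  // hidden layer size
      }
   }
   return sizes;
}

// Parameters of a generic fully connected MLP with one bias per non-input
// neuron: sum over layers l >= 1 of (size[l-1] + 1) * size[l].
int parameterCount(const std::vector<int>& sizes) {
   int total = 0;
   for (std::size_t l = 1; l < sizes.size(); ++l)
      total += (sizes[l - 1] + 1) * sizes[l];
   return total;
}
```

For `x,y:10:8:f` this gives layer sizes {2, 10, 8, 1} and (2+1)*10 + (10+1)*8 + (8+1)*1 = 127 free parameters, to be fitted from the 500 training entries.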

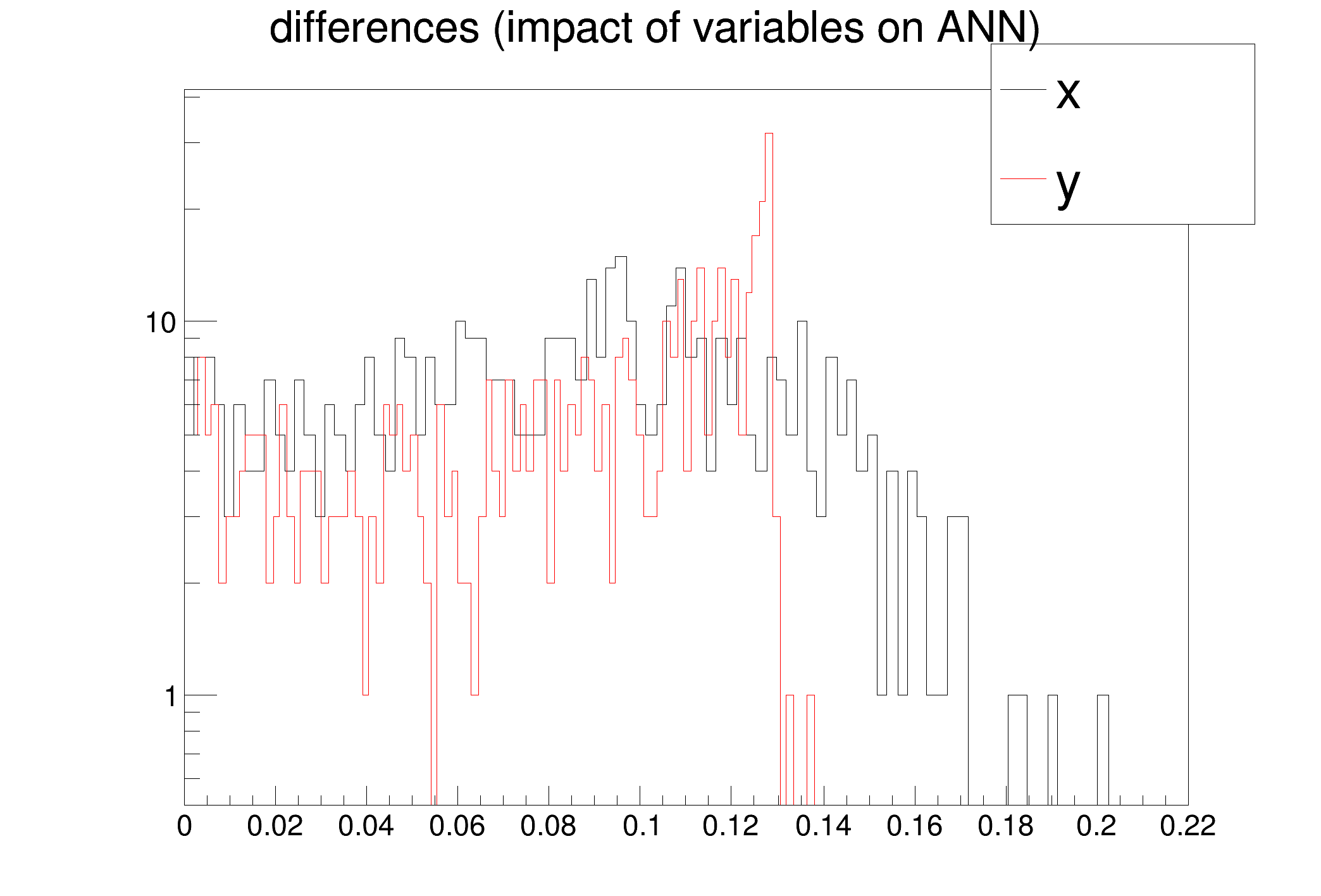

```
inputs with low values in the differences plot may not be needed
x -> 0.0837213 +/- 0.0429966
y -> 0.0818201 +/- 0.039177
```
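The values printed for x and y summarize how strongly the network output reacts to a small variation of each input on the test sample; an input with a small value contributes little and may not be needed. TMLPAnalyzer's exact definition is not reproduced here. As a generic stand-in, the hypothetical helper below computes a finite-difference sensitivity: at random points it shifts one input by `h` and reports the mean and RMS of |f(shifted) - f(original)|, using `theUnknownFunction` in place of a trained network (an assumption made so the sketch runs without ROOT).

```cpp
#include <cassert>
#include <cmath>
#include <random>

// Same test function as theUnknownFunction() in the macro, used here as a
// stand-in for the trained network's output.
double theUnknownFunction(double x, double y) {
   return std::sin((1.7 + x) * (x - 0.3) - 2.3 * (y + 0.7));
}

struct Sensitivity { double mean, rms; };

// Generic finite-difference sensitivity (an assumption, not TMLPAnalyzer's
// exact definition): shift input `which` (0 = x, 1 = y) by h at n random
// points in [0,1) x [0,1) and return the mean and RMS of the absolute change.
Sensitivity inputSensitivity(int which, double h, int n, unsigned seed) {
   assert(which == 0 || which == 1);
   assert(n > 0);
   std::mt19937 gen(seed);
   std::uniform_real_distribution<double> uni(0.0, 1.0);
   double sum = 0.0, sum2 = 0.0;
   for (int i = 0; i < n; ++i) {
      const double x = uni(gen);
      const double y = uni(gen);
      const double base = theUnknownFunction(x, y);
      const double shifted = (which == 0) ? theUnknownFunction(x + h, y)
                                          : theUnknownFunction(x, y + h);
      const double d = std::fabs(shifted - base);
      sum += d;
      sum2 += d * d;
   }
   const double mean = sum / n;
   const double var = sum2 / n - mean * mean;  // population variance
   return {mean, std::sqrt(var > 0.0 ? var : 0.0)};
}
```

Comparing `inputSensitivity(0, h, ...)` with `inputSensitivity(1, h, ...)` ranks the two inputs in the same spirit as the printed summary; an input whose mean change stays near zero has little influence on the output.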

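```cpp
#include <cmath>
#include "TCanvas.h"
#include "TDirectory.h"
#include "TGraph2D.h"
#include "TH2F.h"
#include "TMLPAnalyzer.h"
#include "TMultiLayerPerceptron.h"
#include "TNtuple.h"
#include "TRandom.h"

// The "unknown" function f(x,y) used to generate training and test data.
Double_t theUnknownFunction(Double_t x, Double_t y) {
   return std::sin((1.7+x)*(x-0.3)-2.3*(y+0.7));
}

void mlpRegression() {
   // Create a tree with training and test data: two input
   // parameters x and y, and one output value f(x,y).
   TNtuple* t=new TNtuple("tree","tree","x:y:f");
   TRandom r;
   for (Int_t i=0; i<1000; i++) {
      Float_t x=r.Rndm();
      Float_t y=r.Rndm();
      // In reality f(x,y) would come, e.g., from measurements.
      t->Fill(x,y,theUnknownFunction(x,y));
   }

   // Create the ANN: odd entries train the network, even entries test it.
   TMultiLayerPerceptron* mlp=new TMultiLayerPerceptron("x,y:10:8:f",t,
      "Entry$%2","(Entry$%2)==0");
   // Train for 150 epochs, updating the error graph every 10 epochs.
   mlp->Train(150,"graph update=10");

   // Analyze the network.
   TMLPAnalyzer* mlpa=new TMLPAnalyzer(mlp);
   mlpa->GatherInformations();
   mlpa->CheckNetwork();
   mlpa->DrawDInputs();

   // Draw statistics showing the quality of the ANN's approximation.
   TCanvas* cIO=new TCanvas("TruthDeviation", "TruthDeviation");
   cIO->Divide(2,2);
   cIO->cd(1);
   // ANN output minus truth vs. truth, as TProfiles.
   mlpa->DrawTruthDeviations();

   cIO->cd(2);
   // ANN output minus truth vs. each input (x and y), as TProfiles.
   mlpa->DrawTruthDeviationInsOut();

   cIO->cd(3);
   // Box plot of ANN output minus truth vs. truth.
   mlpa->GetIOTree()->Draw("Out.Out0-True.True0:True.True0>>hDelta","","goff");
   TH2F* hDelta=(TH2F*)gDirectory->Get("hDelta");
   hDelta->SetTitle("Difference between ANN output and truth vs. truth");
   hDelta->Draw("BOX");

   cIO->cd(4);
   // ANN output minus truth on a 15x15 grid from -1 to 1.8 in steps of 0.2,
   // i.e. partly outside the range of the training data (extrapolation).
   Double_t vx[225];
   Double_t vy[225];
   Double_t delta[225];
   Double_t v[2];
   for (Int_t ix=0; ix<15; ix++) {
      v[0]=ix/5.-1.;
      for (Int_t iy=0; iy<15; iy++) {
         v[1]=iy/5.-1.;
         Int_t idx=ix*15+iy;
         vx[idx]=v[0];
         vy[idx]=v[1];
         delta[idx]=mlp->Evaluate(0, v)-theUnknownFunction(v[0],v[1]);
      }
   }
   TGraph2D* g2Extrapolate=new TGraph2D("ANN extrapolation",
      "ANN extrapolation, ANN output - truth",
      225, vx, vy, delta);
   g2Extrapolate->Draw("TRI2");
}
```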
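The last pad evaluates the network on a 15x15 grid (225 points) that is meant to probe extrapolation. Assuming the grid coordinates are v = i/5. - 1. for i = 0..14 on each axis (from -1 to 1.8 in steps of 0.2) and that the training inputs cover [0,1] (both assumptions of this sketch), the hypothetical helper below counts how many grid points actually lie inside the sampled region.

```cpp
#include <cassert>

// Hypothetical helper: counts how many points of an n x n grid with
// coordinates v = i/5. - 1. (i = 0..n-1 on each axis) fall inside the
// unit square [0,1] x [0,1], taken here as the region covered by training data.
int gridPointsInUnitSquare(int n) {
   assert(n > 0);
   int inside = 0;
   for (int ix = 0; ix < n; ++ix) {
      const double vx = ix / 5. - 1.;
      for (int iy = 0; iy < n; ++iy) {
         const double vy = iy / 5. - 1.;
         if (vx >= 0. && vx <= 1. && vy >= 0. && vy <= 1.) ++inside;
      }
   }
   return inside;
}
```

For n = 15 only i = 5..10 lands in [0,1] on each axis, so 36 of the 225 points are interpolations and 189 are extrapolations. Large deviations in the outer region are therefore expected and do not by themselves indicate a poor fit inside the training range.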
