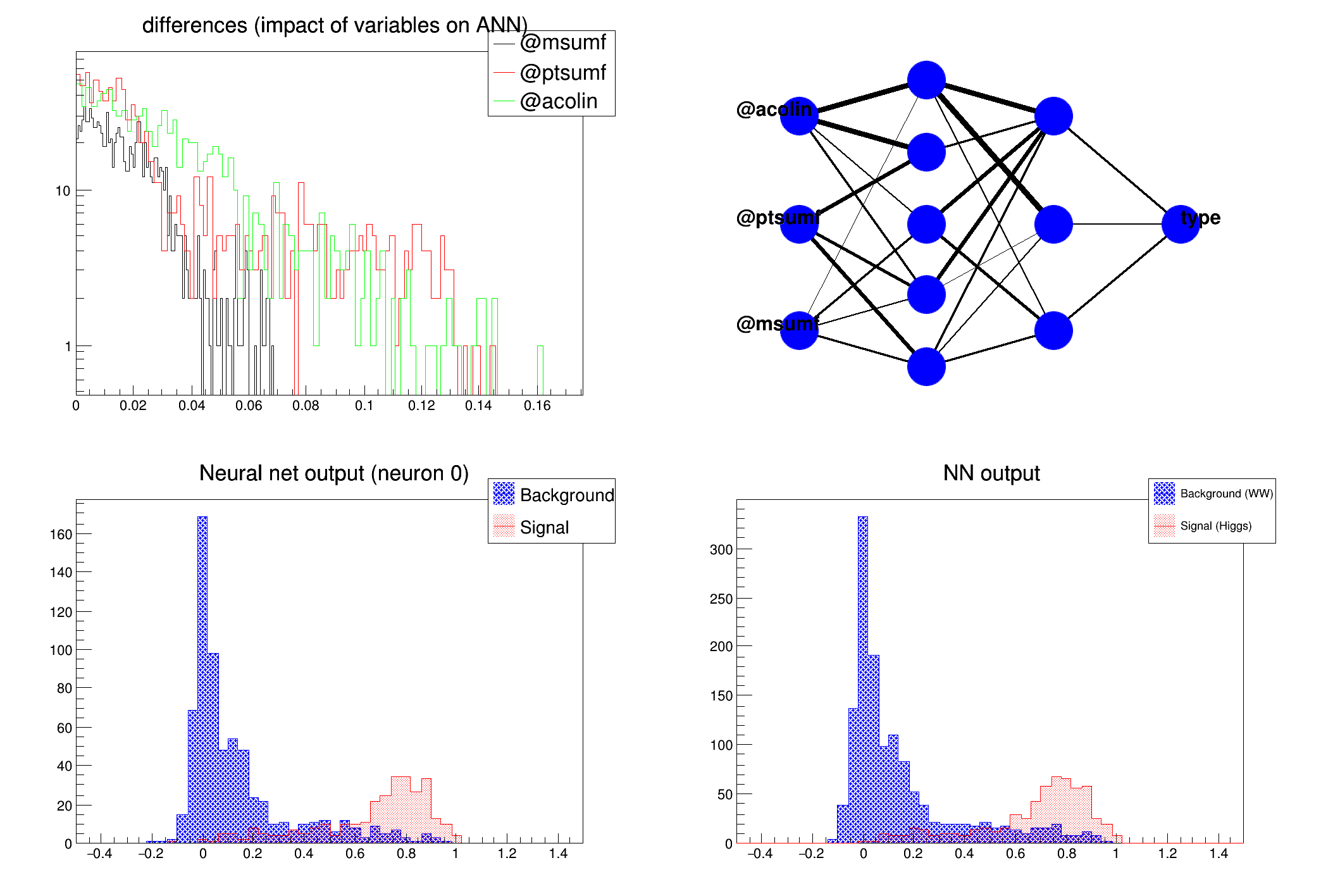

Here is a simplified version of this network, taking into account only WW events.

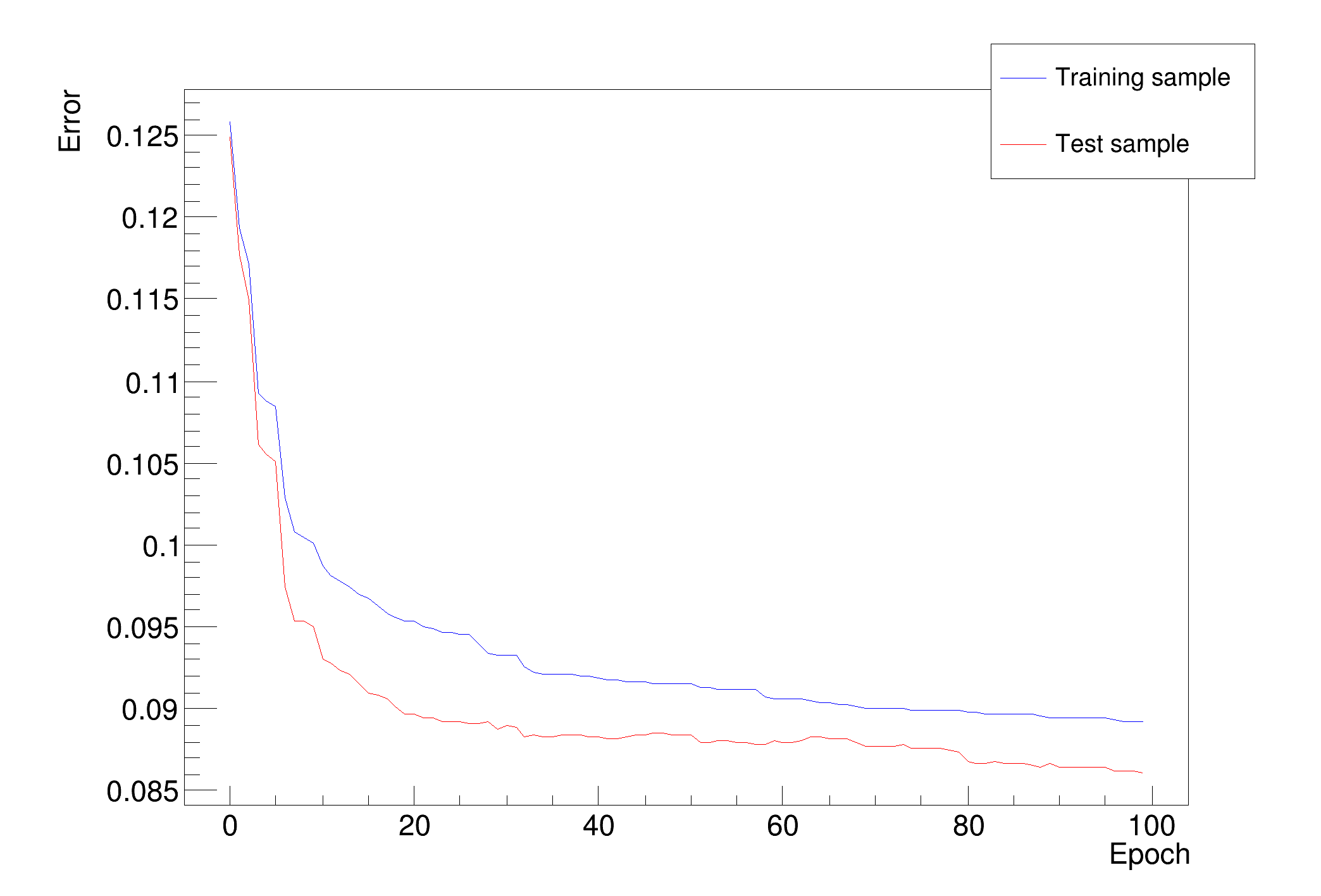

Training the Neural Network

Epoch: 0 learn=0.126967 test=0.12601

Epoch: 10 learn=0.100764 test=0.0966385

Epoch: 20 learn=0.0935478 test=0.0888721

Epoch: 30 learn=0.0931383 test=0.0887024

Epoch: 40 learn=0.0925155 test=0.0887499

Epoch: 50 learn=0.091979 test=0.088258

Epoch: 60 learn=0.091422 test=0.088178

Epoch: 70 learn=0.0909561 test=0.0872078

Epoch: 80 learn=0.0904172 test=0.087079

Epoch: 90 learn=0.090112 test=0.0869323

Epoch: 99 learn=0.0897705 test=0.0863691

Training done.

test.py created.

Network with structure: @msumf,@ptsumf,@acolin:5:3:type

inputs with low values in the differences plot may not be needed

@msumf -> 0.0151835 +/- 0.0164985

@ptsumf -> 0.0306631 +/- 0.0394206

@acolin -> 0.0260384 +/- 0.0271861
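The structure string printed above packs the whole network layout into one colon-separated specification: a comma-separated list of inputs, one field per hidden layer, and the output. The sketch below is a plain C++ illustration of how such a string breaks down; `Layout`, `split` and `parseLayout` are hypothetical helpers, not ROOT functions. It assumes the `@` prefix only marks an input as normalized, so it is stripped to recover the branch name.

```cpp
#include <cstddef>
#include <sstream>
#include <string>
#include <vector>

// Hypothetical helper type (not part of ROOT): the pieces of a layout string.
struct Layout {
    std::vector<std::string> inputs;  // input names, '@' prefix stripped
    std::vector<int> hidden;          // number of neurons in each hidden layer
    std::string output;               // output name
};

// Split `text` on the separator character `sep`.
static std::vector<std::string> split(const std::string& text, char sep) {
    std::vector<std::string> parts;
    std::stringstream stream(text);
    std::string item;
    while (std::getline(stream, item, sep)) parts.push_back(item);
    return parts;
}

// Parse "in1,in2:h1:h2:out". Returns an empty Layout if fewer than two
// colon-separated fields are present.
Layout parseLayout(const std::string& spec) {
    Layout layout;
    const std::vector<std::string> fields = split(spec, ':');
    if (fields.size() < 2) return layout;
    for (std::string name : split(fields.front(), ',')) {
        if (!name.empty() && name[0] == '@') name.erase(0, 1);  // drop normalization marker
        layout.inputs.push_back(name);
    }
    // Every field between the first and the last is a hidden layer size.
    for (std::size_t i = 1; i + 1 < fields.size(); ++i)
        layout.hidden.push_back(std::stoi(fields[i]));
    layout.output = fields.back();
    return layout;
}
```

For `@msumf,@ptsumf,@acolin:5:3:type` this gives three inputs, two hidden layers of 5 and 3 neurons, and the output `type`. The input order is also the order in which the macro fills `params[0]`, `params[1]` and `params[2]`.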

void mlpHiggs(Int_t ntrain=100) {

const char *fname = "mlpHiggs.root";

} else {

printf("accessing %s file from http://root.cern.ch/files\n",fname);

}

TTree *simu = new TTree("MonteCarlo", "Filtered Monte Carlo Events");

Float_t ptsumf, qelep, nch, msumf, minvis, acopl, acolin;

simu->Branch("ptsumf", &ptsumf, "ptsumf/F");

simu->Branch("qelep", &qelep, "qelep/F");

simu->Branch("nch", &nch, "nch/F");

simu->Branch("msumf", &msumf, "msumf/F");

simu->Branch("minvis", &minvis, "minvis/F");

simu->Branch("acopl", &acopl, "acopl/F");

simu->Branch("acolin", &acolin, "acolin/F");

for (i = 0; i < sig_filtered->GetEntries(); i++) {

}

}

"ptsumf",simu,"Entry$%2","(Entry$+1)%2");

mlp->Train(ntrain, "text,graph,update=10");

ana.GatherInformations();

ana.CheckNetwork();

ana.DrawDInputs();

ana.DrawNetwork(0,"type==1","type==0");

TH1F *bg = new TH1F("bgh", "NN output", 50, -.5, 1.5);

TH1F *sig = new TH1F("sigh", "NN output", 50, -.5, 1.5);

params[0] = msumf;

params[1] = ptsumf;

params[2] = acolin;

}

for (i = 0; i < sig_filtered->GetEntries(); i++) {

params[0] = msumf;

params[1] = ptsumf;

params[2] = acolin;

}

legend->AddEntry(bg, "Background (WW)");

legend->AddEntry(sig, "Signal (Higgs)");

}
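The two selection strings passed alongside `simu` in the macro above, `Entry$%2` and `(Entry$+1)%2`, appear to split the tree into two interleaved halves, one for training and one for testing. The sketch below reproduces that arithmetic in plain C++. It assumes the first string is the training selection and the second the test selection, and that a non-zero value selects the entry; `inTrainingSet` and `inTestSet` are hypothetical names, not ROOT calls.

```cpp
// Hypothetical helpers (not ROOT functions) mirroring the two selection strings.

// "Entry$%2": non-zero for odd entry numbers.
bool inTrainingSet(long long entry) { return entry % 2 != 0; }

// "(Entry$+1)%2": non-zero for even entry numbers.
bool inTestSet(long long entry) { return (entry + 1) % 2 != 0; }
```

Under these assumptions every entry lands in exactly one of the two samples: odd-numbered entries are used for training and even-numbered entries for testing, which matches the separate `learn` and `test` errors printed at each epoch.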


char * Form(const char *fmt,...)

Formats a string in a circular formatting buffer.

R__EXTERN TSystem * gSystem

virtual void SetFillColor(Color_t fcolor)

Set the fill area color.

virtual void SetFillStyle(Style_t fstyle)

Set the fill area style.

virtual void SetLineColor(Color_t lcolor)

Set the line color.

TVirtualPad * cd(Int_t subpadnumber=0) override

Set current canvas & pad.

A ROOT file is a suite of consecutive data records (TKey instances) with a well defined format.

static TFile * Open(const char *name, Option_t *option="", const char *ftitle="", Int_t compress=ROOT::RCompressionSetting::EDefaults::kUseCompiledDefault, Int_t netopt=0)

Create / open a file.

1-D histogram with a float per channel (see TH1 documentation)

virtual void SetDirectory(TDirectory *dir)

By default, when a histogram is created, it is added to the list of histogram objects in the current ...

virtual Int_t Fill(Double_t x)

Increment bin with abscissa X by 1.
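To make "the bin with abscissa X" concrete for the two "NN output" histograms booked in the macro (50 bins from -0.5 to 1.5, so each bin is 0.04 wide), here is a minimal sketch of the bin lookup for equal-width bins. `findBin` is a hypothetical stand-alone function, not the ROOT implementation; it follows the usual TH1 numbering, where bin 0 is underflow and bin `nbins + 1` is overflow.

```cpp
#include <cmath>

// Hypothetical helper (not a ROOT function): bin number for x in a histogram
// with nbins equal-width bins covering [lo, hi). Assumes x is finite.
// Numbering: 0 = underflow, 1..nbins = regular bins, nbins + 1 = overflow.
int findBin(double x, int nbins, double lo, double hi) {
    if (x < lo) return 0;            // below the axis range
    if (x >= hi) return nbins + 1;   // upper edge is excluded from the last bin
    return 1 + static_cast<int>(std::floor(nbins * (x - lo) / (hi - lo)));
}
```

With this binning a network output of 0.0 falls in bin 13 and an output of 1.0 in bin 38, so the margin on either side of the target values 0 and 1 leaves room for outputs that overshoot them.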

void Draw(Option_t *option="") override

Draw this histogram with options.

virtual void SetStats(Bool_t stats=kTRUE)

Set statistics option on/off.

This class displays a legend box (TPaveText) containing several legend entries.

TLegendEntry * AddEntry(const TObject *obj, const char *label="", Option_t *option="lpf")

Add a new entry to this legend.

void Draw(Option_t *option="") override

Draw this legend with its current attributes.

This utility class contains a set of tests useful when developing a neural network.

This class describes a neural network.

Double_t Evaluate(Int_t index, Double_t *params) const

Returns the neural network output for a given set of input parameters; the number of parameters must equal the number of input neurons.

void Export(Option_t *filename="NNfunction", Option_t *language="C++") const

Exports the NN as a function for any non-ROOT-dependent code. Supported languages are: only C++, ...

void Train(Int_t nEpoch, Option_t *option="text", Double_t minE=0)

Train the network.

void Draw(Option_t *option="") override

Draws the network structure.

void Divide(Int_t nx=1, Int_t ny=1, Float_t xmargin=0.01, Float_t ymargin=0.01, Int_t color=0) override

Automatic pad generation by division.

static const TString & GetTutorialDir()

Get the tutorials directory in the installation. Static utility function.

virtual Bool_t AccessPathName(const char *path, EAccessMode mode=kFileExists)

Returns FALSE if one can access a file using the specified access mode.
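The inverted return value is easy to misread, so here is a small stand-in that mimics the convention in plain C++. `accessPathNameLike` is a hypothetical function, not ROOT code, and it only approximates the check by trying to open the file for reading.

```cpp
#include <fstream>

// Hypothetical stand-in (not ROOT code) for the inverted return convention:
// returns false when the file CAN be opened for reading, true otherwise.
bool accessPathNameLike(const char* path) {
    std::ifstream file(path);
    return !file.is_open();
}
```

A caller therefore negates the result to test for presence, as in `if (!accessPathNameLike(path)) { /* file is available */ } else { /* fall back */ }`. This is consistent with the macro's `else` branch, which reports that the file is being accessed from http://root.cern.ch/files.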

A TTree represents a columnar dataset.

virtual Int_t Fill()

Fill all branches.

virtual Int_t GetEntry(Long64_t entry, Int_t getall=0)

Read all branches of entry and return total number of bytes read.

virtual Int_t SetBranchAddress(const char *bname, void *add, TBranch **ptr=nullptr)

Change branch address, dealing with clone trees properly.

virtual Long64_t GetEntries() const

TBranch * Branch(const char *name, T *obj, Int_t bufsize=32000, Int_t splitlevel=99)

Add a new branch, and infer the data type from the type of obj being passed.