Here is a simplified version of this network, taking into account only WW events.

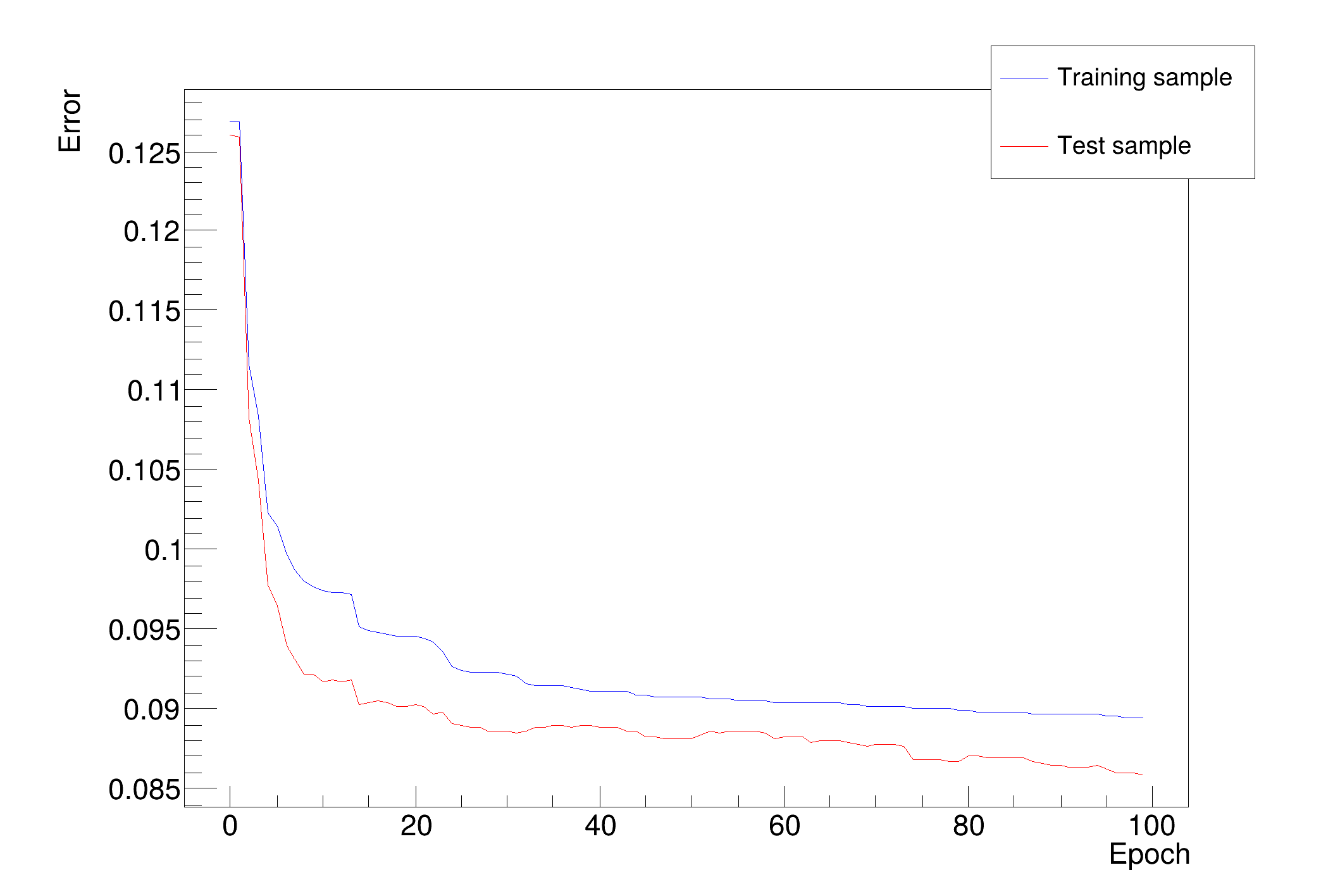

Training the Neural Network

Epoch: 0 learn=0.128023 test=0.127183

Epoch: 10 learn=0.116235 test=0.112177

Epoch: 20 learn=0.0980667 test=0.0919927

Epoch: 30 learn=0.0919145 test=0.0891968

Epoch: 40 learn=0.0912454 test=0.0886909

Epoch: 50 learn=0.0910373 test=0.0883706

Epoch: 60 learn=0.0905059 test=0.0878303

Epoch: 70 learn=0.0901137 test=0.0876918

Epoch: 80 learn=0.0898399 test=0.0864345

Epoch: 90 learn=0.0896016 test=0.0863277

Epoch: 99 learn=0.0893663 test=0.0864844

Training done.
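In the log above, the lowest printed test error is at epoch 90 (0.0863277), and it rises slightly by epoch 99 (0.0864844). The following is a minimal sketch, in plain C++ without ROOT, of picking the printed epoch with the lowest test error. `EpochRecord`, `trainingLog` and `bestTestEpoch` are hypothetical names introduced here for illustration; the numbers are copied from the output above.

```cpp
#include <cstddef>
#include <vector>

// One printed line of the training log (hypothetical helper type).
struct EpochRecord {
    int epoch;
    double learn;  // error on the training sample
    double test;   // error on the test sample
};

// Values copied from the training output shown above.
inline std::vector<EpochRecord> trainingLog() {
    return {
        {0, 0.128023, 0.127183},   {10, 0.116235, 0.112177},
        {20, 0.0980667, 0.0919927}, {30, 0.0919145, 0.0891968},
        {40, 0.0912454, 0.0886909}, {50, 0.0910373, 0.0883706},
        {60, 0.0905059, 0.0878303}, {70, 0.0901137, 0.0876918},
        {80, 0.0898399, 0.0864345}, {90, 0.0896016, 0.0863277},
        {99, 0.0893663, 0.0864844},
    };
}

// Return the index of the record with the smallest test error.
// Assumes the log is not empty.
inline std::size_t bestTestEpoch(const std::vector<EpochRecord>& log) {
    std::size_t best = 0;
    for (std::size_t i = 1; i < log.size(); ++i) {
        if (log[i].test < log[best].test) best = i;
    }
    return best;
}
```

Calling `bestTestEpoch(trainingLog())` gives the index of the epoch-90 record. The gap to epoch 99 is small, so this is only an indication that the test error has flattened out, not evidence of overtraining.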

test.py created.

Network with structure: @msumf,@ptsumf,@acolin:5:3:type

inputs with low values in the differences plot may not be needed

@msumf -> 0.0163246 +/- 0.0150538

@ptsumf -> 0.0300405 +/- 0.0411695

@acolin -> 0.0319584 +/- 0.0323052
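The structure string `@msumf,@ptsumf,@acolin:5:3:type` printed above reads as three input neurons, two hidden layers with 5 and 3 neurons, and one output neuron `type`. In `TMultiLayerPerceptron` a leading `@` marks a variable that is normalized. The sketch below decodes such a string in plain C++ without ROOT. `Layout`, `splitOn` and `parseLayout` are hypothetical helpers, and the parser only handles this simple form (plain names, integer hidden-layer sizes, one output field), not the full syntax ROOT accepts.

```cpp
#include <cstddef>
#include <sstream>
#include <string>
#include <vector>

// Decoded form of a layout string (hypothetical helper type).
struct Layout {
    std::vector<std::string> inputs;  // input names with a leading '@' removed
    std::vector<bool> normalized;     // true where the input carried a leading '@'
    std::vector<int> hidden;          // number of neurons in each hidden layer
    std::string output;               // last field, taken as-is
};

// Split a string on a single separator character.
inline std::vector<std::string> splitOn(const std::string& s, char sep) {
    std::vector<std::string> parts;
    std::stringstream ss(s);
    std::string item;
    while (std::getline(ss, item, sep)) parts.push_back(item);
    return parts;
}

// Parse "in1,in2,...:h1:h2:...:out" into its layers.
inline Layout parseLayout(const std::string& text) {
    Layout out;
    const std::vector<std::string> fields = splitOn(text, ':');
    if (fields.size() < 2) return out;  // need at least inputs and an output
    for (const std::string& name : splitOn(fields.front(), ',')) {
        const bool norm = !name.empty() && name[0] == '@';
        out.normalized.push_back(norm);
        out.inputs.push_back(norm ? name.substr(1) : name);
    }
    // Every field between the first and the last is a hidden-layer size.
    for (std::size_t i = 1; i + 1 < fields.size(); ++i) {
        out.hidden.push_back(std::stoi(fields[i]));
    }
    out.output = fields.back();
    return out;
}
```

For the string above, `parseLayout` yields the inputs `msumf`, `ptsumf` and `acolin` (all flagged as normalized), hidden sizes 5 and 3, and the output `type`.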

const char *fname = "mlpHiggs.root";

} else {

printf("accessing %s file from http://root.cern/files\n", fname);

}

simu->Branch("nch", &nch, "nch/F");

}

}

"ptsumf", simu, "Entry$%2", "(Entry$+1)%2");

ana.GatherInformations();
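The selection strings `"Entry$%2"` and `"(Entry$+1)%2"` in the fragment above split the tree by entry number. This assumes they are the training and test selections of the `TMultiLayerPerceptron` constructor, which is what its documented argument order (layout, weight, tree, training, test) suggests; `Entry$` is the current entry number in a `TTree` formula. Under that assumption odd entries are used for training and even entries for testing. A minimal sketch in plain C++ without ROOT, with the hypothetical names `Split` and `splitByParity`:

```cpp
#include <vector>

// Entry numbers assigned to each sample (hypothetical helper type).
struct Split {
    std::vector<long long> training;
    std::vector<long long> test;
};

// Mimic the two selections on the entry numbers 0 .. nEntries-1.
// A non-zero value of the expression selects the entry.
inline Split splitByParity(long long nEntries) {
    Split s;
    for (long long entry = 0; entry < nEntries; ++entry) {
        if (entry % 2) s.training.push_back(entry);    // "Entry$%2": odd entries
        if ((entry + 1) % 2) s.test.push_back(entry);  // "(Entry$+1)%2": even entries
    }
    return s;
}
```

With five entries, the training sample holds entries 1 and 3 and the test sample holds entries 0, 2 and 4, so the two samples are disjoint and together cover the whole tree.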

ana.DrawNetwork(0, "type==1", "type==0");

TH1F *bg = new TH1F("bgh", "NN output", 50, -.5, 1.5);

TH1F *sig = new TH1F("sigh", "NN output", 50, -.5, 1.5);

}

}

bg->SetFillStyle(3008);

bg->SetFillColor(kBlue);

legend->AddEntry(bg, "Background (WW)");

legend->AddEntry(sig, "Signal (Higgs)");

}

ROOT::Detail::TRangeCast< T, true > TRangeDynCast

TRangeDynCast is an adapter class that allows the typed iteration through a TCollection.

char * Form(const char *fmt,...)

Formats a string in a circular formatting buffer.

R__EXTERN TSystem * gSystem

virtual void SetFillColor(Color_t fcolor)

Set the fill area color.

virtual void SetFillStyle(Style_t fstyle)

Set the fill area style.

virtual void SetLineColor(Color_t lcolor)

Set the line color.

A ROOT file is an on-disk file, usually with extension .root, that stores objects in a file-system-li...

static TFile * Open(const char *name, Option_t *option="", const char *ftitle="", Int_t compress=ROOT::RCompressionSetting::EDefaults::kUseCompiledDefault, Int_t netopt=0)

Create / open a file.

1-D histogram with a float per channel (see TH1 documentation)

virtual void SetDirectory(TDirectory *dir)

By default, when a histogram is created, it is added to the list of histogram objects in the current ...

virtual Int_t Fill(Double_t x)

Increment bin with abscissa X by 1.

void Draw(Option_t *option="") override

Draw this histogram with options.

virtual void SetStats(Bool_t stats=kTRUE)

Set statistics option on/off.

This class displays a legend box (TPaveText) containing several legend entries.

This utility class contains a set of tests useful when developing a neural network.

This class describes a neural network.

Double_t Evaluate(Int_t index, Double_t *params) const

Returns the neural network output for a given set of input parameters; the number of parameters must equal the number of input neurons.

void Export(Option_t *filename="NNfunction", Option_t *language="C++") const

Exports the NN as a function for any non-ROOT-dependent code. Supported languages are: only C++ ,...

void Train(Int_t nEpoch, Option_t *option="text", Double_t minE=0)

Train the network.

void Draw(Option_t *option="") override

Draws the network structure.

static const TString & GetTutorialDir()

Get the tutorials directory in the installation. Static utility function.

virtual Bool_t AccessPathName(const char *path, EAccessMode mode=kFileExists)

Returns FALSE if one can access a file using the specified access mode.

A TTree represents a columnar dataset.